Who do you think are your most loyal and dedicated visitors? Who always returns for more of your website content and looks through every one of your pages? That’s exactly right: crawling bots. Bot requests put additional load on your server and can slow it down significantly. We’ve screened hundreds of servers to compile a list of “good” search bots that help you increase online visibility and “bad” crawlers that add no value. Managing how these bots access your website is crucial for maintaining server performance and improving the overall user experience.

Bad crawling bots

The crawling bots listed here are not necessarily harmful. You can consider them “bad robots” because of their request volume, which eats up too many server resources and too much bandwidth. They are also suspected of sometimes ignoring robots.txt directives and scanning the website anyway. Nevertheless, blocking them is not a must if you have a strong server and want to contribute your website information and content to the analytics aggregators. If you do not block them outright, these bots tend to obey the Crawl-delay directive in robots.txt.

User-agent: MJ12Bot 👎

Majestic is a UK-based specialist search engine used by hundreds of thousands of businesses in 13 languages and over 60 countries to paint a map of the Internet independent of the consumer-based search engines. Majestic also powers other legitimate technologies that help to understand the continually changing fabric of the web.

User-agent: PetalBot or AspiegelBot 👎

PetalBot is an automatic program of the Petal search engine. The function of PetalBot is to access both PC and mobile websites and establish an index database that enables users to search the content of your site in the Petal search engine.

User-agent: AhrefsBot 👎

AhrefsBot is a powerful Web Crawler designed to enhance the functionality of the Ahrefs online marketing toolset. This advanced bot is responsible for maintaining a vast 12 trillion link database, ensuring that Ahrefs users have access to the most comprehensive and up-to-the-minute data for their SEO needs.

User-agent: SEMrushBot 👎

SEMrushBot is the search bot software that SEMrush sends out to discover and collect new and updated web data. Data collected by SEMrushBot is used in SEMrush reports, research, and graphs.

User-agent: DotBot 👎

DotBot is the web crawler used by Moz.com. The data collected through DotBot is surfaced on the Moz site, in Moz tools, and is also available via the Mozscape API.

User-agent: MauiBot 👎

An unidentified bot hosted on Amazon servers that scans websites around the globe: that is pretty much all that most webmasters know about it. It is usually blocked because of the enormous volume of requests it makes. If you have more information about this bot, do not hesitate to share it with the online community. It will be highly appreciated.

Good crawling bots

Good bots typically belong to search engines and are known as search bots. They read your content in order to show it in search results. They always identify themselves and never ignore robots.txt directives. Make sure you never block them at the root level. Otherwise, forget about organic traffic.

User-agent: Googlebot 👍

Googlebot is Google’s web crawling bot. Googlebot’s crawl process begins with a list of webpage URLs, generated from previous crawl processes and augmented with Sitemap data provided by webmasters. As Googlebot visits each of these websites it detects links (SRC and HREF) on each page and adds them to its list of pages to crawl. New sites, changes to existing sites, and dead links are noted and used to update the Google index.

User-agent: Bingbot 👍

Bingbot is the standard Bing crawler and handles most of Bing’s crawling needs each day. Bingbot uses a couple of different user agent strings, including several mobile variants that Bing uses to crawl the mobile web.

User-agent: Slurp 👍

Slurp is the Yahoo Search robot for crawling and indexing web page information. Although some Yahoo Search results are powered by their partners, sites should allow Yahoo Slurp access in order to appear in Yahoo Mobile Search results. The bot also collects content from partner sites for inclusion within sites like Yahoo News, Yahoo Finance, and Yahoo Sports.

User-agent: DuckDuckBot 👍

DuckDuckBot is the Web crawler for DuckDuckGo, a search engine that has become quite popular lately as it is known for privacy and not tracking you. It now handles over 12 million queries per day. The bot helps to connect consumers and businesses.

User-agent: YandexBot 👍

YandexBot is the web crawler for one of the largest Russian search engines, Yandex, which generates over 50% of all search traffic in Russia. Yandex has several types of robots that perform different functions.

How to control crawlers?

You have two ways to control bot activity: with robots.txt or at the server level.

Robots.txt

This is the most common method and is enough in most cases. A rule that blocks a bot from crawling the entire website looks like this:

User-agent: Bad_bot_name
Disallow: /

If you want to disallow only a certain directory, add the following:

User-agent: Bad_bot_name
Disallow: /directory_name/

Use Crawl-delay directive

Crawl-delay is an unofficial directive that asks crawlers to slow down so that they do not overload the web server. Some search engines don’t support this directive and keep their own crawl-rate settings in the webmaster’s account area instead 🙁

Crawl-delay: 1

Server

If you see that bots ignore robots.txt and keep loading your server, you are on the right track in treating them as bad bots: they demonstrate disrespectful behavior. Go ahead and block them completely at the server level.
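Before deciding that a bot ignores robots.txt, it is worth confirming that the file actually says what you intend. The sketch below is one possible way to do that with Python's standard `urllib.robotparser` module. The robots.txt content is an illustrative assumption assembled from the patterns in this article, and `load_rules` is a hypothetical helper name, not part of any library.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content (an assumption, not a recommended policy).
ROBOTS_TXT = """\
User-agent: MJ12Bot
Disallow: /

User-agent: AhrefsBot
Crawl-delay: 1
Disallow: /directory_name/

User-agent: *
Disallow:
"""


def load_rules(text):
    """Parse robots.txt text and return a parser that can be queried."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)

# Pass the short bot name here, not a full User-Agent header.
print(rules.can_fetch("MJ12Bot", "/any/page.html"))            # False
print(rules.can_fetch("AhrefsBot", "/directory_name/a.html"))  # False
print(rules.can_fetch("AhrefsBot", "/blog/"))                  # True
print(rules.crawl_delay("AhrefsBot"))                          # 1
print(rules.can_fetch("Googlebot", "/any/page.html"))          # True
```

To check the live file rather than a string, the same class also provides `set_url()` and `read()`. Keep in mind that this only shows how a well-behaved parser reads your rules. It says nothing about whether a given bot chooses to respect them.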

If you are using Apache, block bots with an .htaccess file or, if you have access to it, in the virtual host configuration. If you are using NGINX, add the blocking rules to nginx.conf.

Important! Configuring a server can be complicated, and a configuration done incorrectly can be useless or even harmful. We recommend asking your hosting provider to set up bot blocking for you the right way.

Conclusion

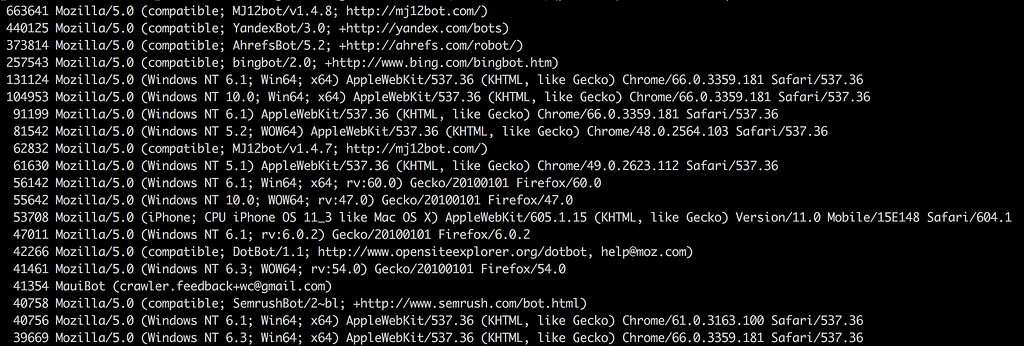

This crawler list is far from complete. It aims to draw your attention to the subject of bot control, which can improve your server performance and in some cases reduce your hosting costs. For an extended “Bad bot list”, you can visit the Bot Reports website. Review your logs and separate the bots that help your website grow from those that put a spoke in your wheel.
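As a starting point for reviewing logs, here is a minimal Python sketch that counts requests per bot. It assumes your access log uses the common "combined" format, where the user agent is the last quoted field on each line. The function name `count_bot_hits`, the sample log lines, and the IP addresses are hypothetical, and the bot names are simply the ones from this article.

```python
import re
from collections import Counter

# Assumption: "combined" log format, user agent is the last quoted field.
UA_PATTERN = re.compile(r'"([^"]*)"\s*$')

# Bot names taken from this article; extend the tuple to suit your own logs.
KNOWN_BOTS = (
    "MJ12Bot", "PetalBot", "AspiegelBot", "AhrefsBot", "SEMrushBot",
    "DotBot", "MauiBot", "Googlebot", "Bingbot", "Slurp",
    "DuckDuckBot", "YandexBot",
)


def count_bot_hits(lines):
    """Return a Counter mapping each known bot name to its request count."""
    hits = Counter()
    for line in lines:
        match = UA_PATTERN.search(line)
        if not match:
            continue  # line has no trailing quoted field
        user_agent = match.group(1).lower()
        for bot in KNOWN_BOTS:
            if bot.lower() in user_agent:
                hits[bot] += 1
                break
    return hits


# Made-up sample lines standing in for a real access log.
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)"',
    '203.0.113.5 - - [10/Oct/2023:13:55:37 +0000] "GET /a HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)"',
    '198.51.100.7 - - [10/Oct/2023:13:56:01 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.9 - - [10/Oct/2023:13:57:12 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

for bot, count in count_bot_hits(SAMPLE_LOG).most_common():
    print(bot, count)
```

To run it on a real log, pass an open file object instead of `SAMPLE_LOG`, since the function accepts any iterable of lines. A user agent string can be faked, so treat these counts as a first indication rather than proof of who is crawling.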

Contact us if you need assistance in dealing with Bad Bots.